Implementing DevOps in AWS

What is DevOps?

DevOps is an integrated approach that combines software development and IT operations to improve collaboration, communication, and effectiveness across the entire software development lifecycle.

The key principles of DevOps include:

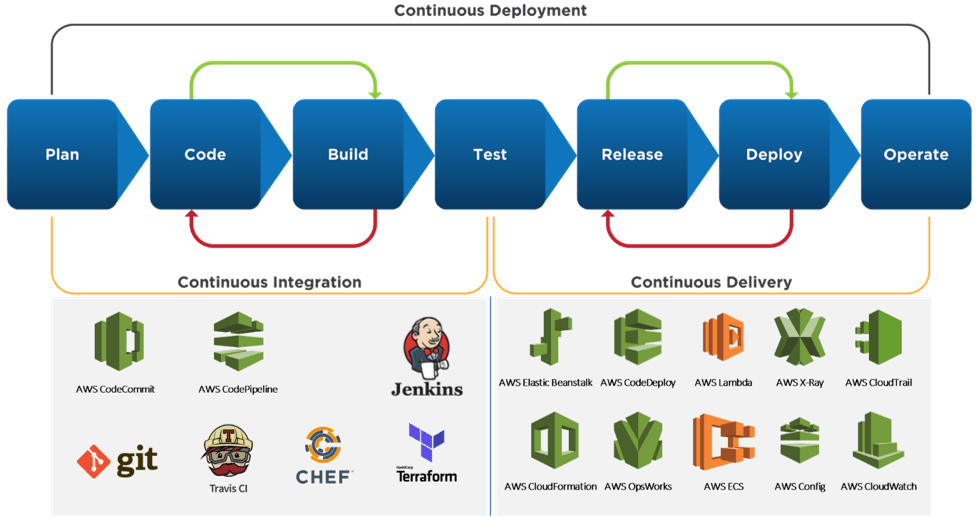

Continuous Integration and Continuous Delivery (CI/CD)

Infrastructure as Code (IaC)

Automation

Monitoring and Feedback

AWS Services for DevOps:

How AWS provides a solid foundation for implementing DevOps practices:

AWS provides an extensive range of services that facilitate the adoption of DevOps methodologies. It supplies the tools and infrastructure needed to automate, scale, and monitor applications, fostering collaboration and helping organizations deliver software faster, improve quality, and optimize efficiency.

Let's see how we can use AWS services for DevOps.

AWS services that support the DevOps lifecycle:

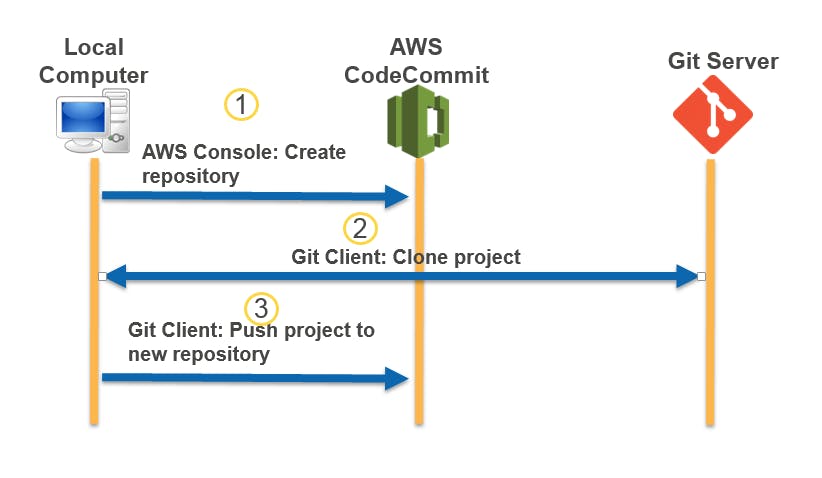

AWS CodeCommit: CodeCommit is a managed source code repository service. It provides a secure and scalable platform for hosting your Git repositories. Teams can collaborate on code, manage branches, and easily integrate with other AWS DevOps services. CodeCommit supports features like access control, branch policies, and integration with CI/CD pipelines.

Purpose: CodeCommit is a managed source code repository service that allows developers to securely store and version control their code.

Benefits:

Centralized code repository: CodeCommit provides a secure and scalable platform for storing code, making it easily accessible to development teams.

Version control and collaboration: Developers can track changes, manage branches, and review each other's code through pull requests, which helps maintain code integrity.

Integration with CI/CD: CodeCommit seamlessly integrates with other AWS DevOps services, such as CodePipeline and CodeBuild, enabling smooth code integration into the CI/CD pipeline.
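Because CodeCommit speaks standard Git, developers reach a repository through an ordinary Git remote URL. Here's a minimal sketch that builds the two URL forms commonly used with CodeCommit. The first is the HTTPS URL used with Git credentials. The second is the `codecommit::` form understood by the `git-remote-codecommit` helper. The repository name `my-service` and the Region are hypothetical placeholders.

```python
def codecommit_https_url(repo_name: str, region: str) -> str:
    """HTTPS clone URL for a CodeCommit repository (used with Git credentials)."""
    if not repo_name or not region:
        raise ValueError("repo_name and region must be non-empty")
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo_name}"


def codecommit_grc_url(repo_name: str, region: str) -> str:
    """URL form understood by the git-remote-codecommit helper (pip install git-remote-codecommit)."""
    if not repo_name or not region:
        raise ValueError("repo_name and region must be non-empty")
    return f"codecommit::{region}://{repo_name}"


if __name__ == "__main__":
    # Hypothetical repository and Region: print the clone commands a developer would run.
    print("git clone " + codecommit_https_url("my-service", "us-east-1"))
    print("git clone " + codecommit_grc_url("my-service", "us-east-1"))
```

After cloning, the usual `git push` and `git pull` workflow applies unchanged.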

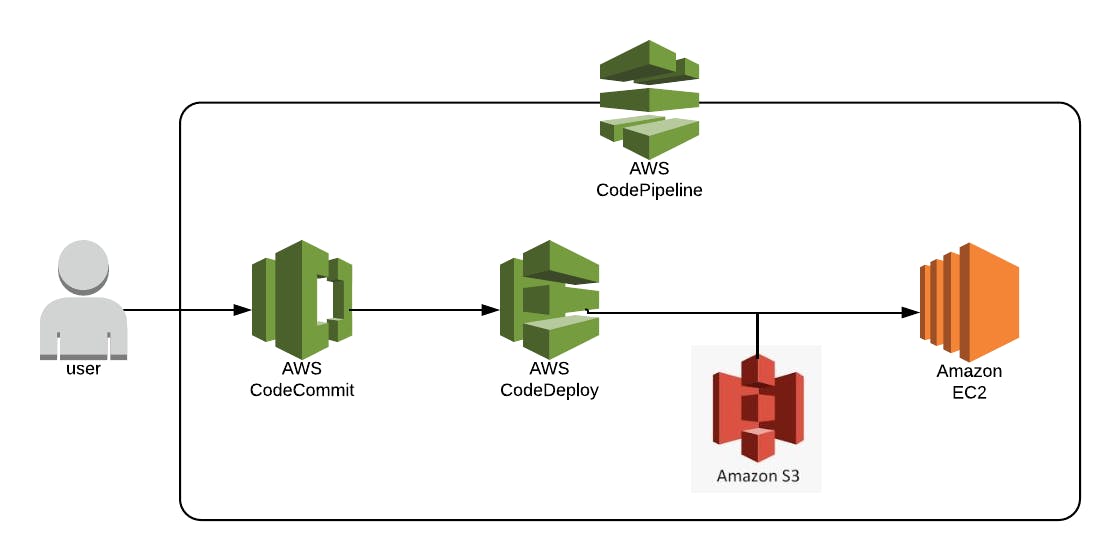

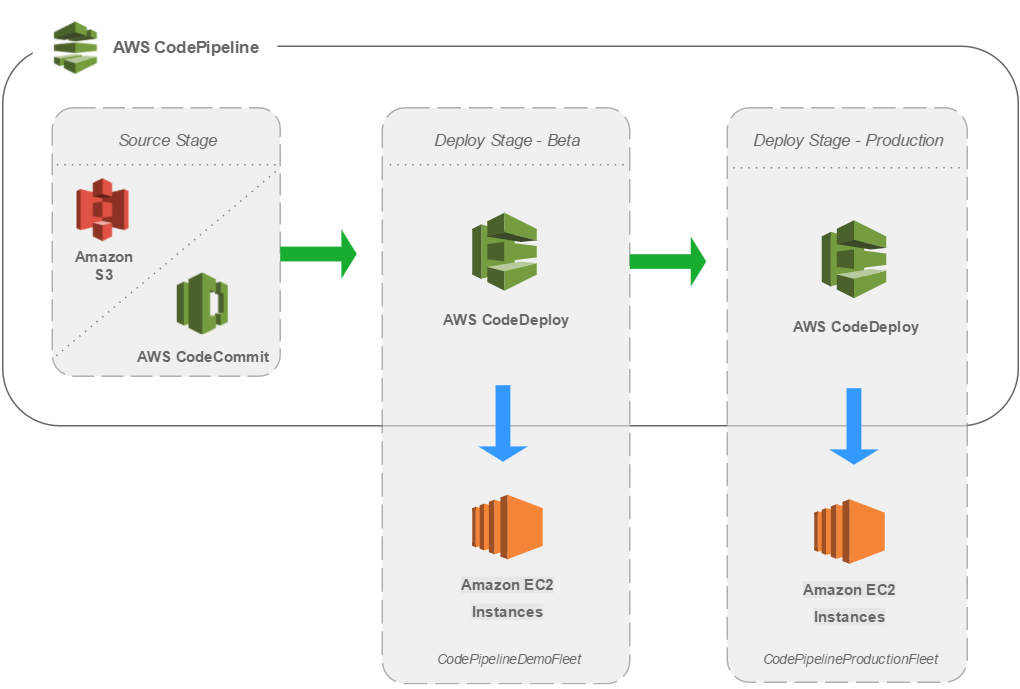

AWS CodePipeline: CodePipeline is a fully managed continuous integration and continuous delivery (CI/CD) service. It enables you to create end-to-end automated release pipelines for your applications. CodePipeline allows you to define stages, such as source code retrieval, build, test, and deployment, and it integrates with various AWS and third-party tools to automate the entire software release process.

Purpose: CodePipeline is a fully managed CI/CD service that helps automate software release pipelines.

Benefits:

Automated workflow: CodePipeline allows developers to define and automate the entire release process, from source code retrieval to deployment.

Continuous integration and delivery: It supports continuous integration by triggering build and test stages whenever changes are detected in the code repository. It facilitates continuous delivery by automating the deployment process.

Flexibility and extensibility: CodePipeline integrates with various AWS services and third-party tools, allowing customization and extending the pipeline's capabilities based on specific requirements.

Visual pipeline view: CodePipeline provides a visual representation of the pipeline stages, enabling easy monitoring and troubleshooting.

AWS CodeBuild: CodeBuild is a fully managed build service that compiles source code, runs tests, and produces deployable artifacts. It supports popular programming languages and build tools, and it integrates easily with CodeCommit, CodePipeline, and other AWS services. CodeBuild eliminates the need to manage build infrastructure and scales automatically based on workload requirements.

Purpose: CodeBuild is a fully managed build service that compiles source code, runs tests, and generates deployment artifacts.

Benefits:

Automated build process: CodeBuild automates the build process, freeing developers from managing infrastructure and build environments.

Scalability and efficiency: CodeBuild scales automatically to accommodate the build workload, helping keep builds fast and efficient.

Integration with CI/CD: CodeBuild integrates seamlessly with CodePipeline, allowing developers to include build stages in the CI/CD workflow.

Extensibility and customization: It supports various programming languages and build tools, providing flexibility to tailor the build process to specific project requirements.
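A CodeBuild project is driven by a build specification (`buildspec.yml`) that lists commands per phase and declares the output artifacts. The sketch below shows that layout as a Python dict for a hypothetical Python project, along with a cheap local sanity check. The commands, the `dist` directory, and the Python runtime version are assumptions. The runtime version must be one your chosen CodeBuild image supports. The JSON printout is only for inspection; to produce the committed file, convert it to YAML (for example with PyYAML's `yaml.safe_dump`).

```python
import json

# Hypothetical buildspec for a Python project, mirroring the buildspec.yml layout.
BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "install": {
            # Must be a runtime version supported by the chosen CodeBuild image.
            "runtime-versions": {"python": "3.12"},
            "commands": ["pip install -r requirements.txt build"],
        },
        "build": {
            "commands": [
                "python -m pytest --junitxml=reports/junit.xml",
                "python -m build --outdir dist",
            ],
        },
        "post_build": {"commands": ["echo Build finished"]},
    },
    # Files CodeBuild uploads as the build's output artifact.
    "artifacts": {"files": ["**/*"], "base-directory": "dist"},
}

REQUIRED_KEYS = {"version", "phases"}
KNOWN_PHASES = {"install", "pre_build", "build", "post_build"}


def validate_buildspec(spec: dict) -> list[str]:
    """Cheap local sanity check before committing; not a full schema validation."""
    problems = [f"missing top-level key: {k}" for k in sorted(REQUIRED_KEYS - set(spec))]
    problems += [
        f"unknown phase: {p}" for p in sorted(set(spec.get("phases", {})) - KNOWN_PHASES)
    ]
    return problems


if __name__ == "__main__":
    assert validate_buildspec(BUILDSPEC) == []
    print(json.dumps(BUILDSPEC, indent=2))
```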

AWS CodeDeploy: CodeDeploy automates application deployments to EC2 instances, on-premises servers, serverless Lambda functions, and Amazon ECS services. It helps make deployments smooth and reliable by supporting deployment strategies such as in-place (rolling) updates and blue/green deployments. CodeDeploy integrates with other DevOps tools. It deploys application revisions stored in Amazon S3 or GitHub, and it can run as the deploy action of a CodePipeline pipeline that uses CodeCommit as its source.

Purpose: CodeDeploy automates the deployment of applications to EC2 instances, on-premises servers, or Lambda functions.

Benefits:

Automated and consistent deployments: CodeDeploy eliminates the need for manual deployments, ensuring consistency and reducing human error.

Deployment strategies: It supports different deployment strategies, such as rolling updates and blue/green deployments, providing flexibility and minimizing downtime.

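Application health monitoring: CodeDeploy tracks the health of instances during a deployment (through lifecycle hook scripts and, optionally, Amazon CloudWatch alarms). When automatic rollback is enabled, it redeploys the last known good revision if the deployment fails or an alarm is triggered.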

Integration with CI/CD: CodeDeploy seamlessly integrates with CodePipeline, allowing automated deployments as part of the CI/CD workflow.
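For EC2 and on-premises targets, CodeDeploy reads an `appspec.yml` file at the root of the revision. The file says where to copy files and which scripts to run at each lifecycle hook. The sketch below expresses a hypothetical AppSpec as a Python dict and lists its hooks in the order CodeDeploy runs them during an in-place deployment. The script paths and install directory are assumptions. Reserved events (such as `DownloadBundle` and `Install`) and the load-balancer hooks are deliberately left out. The `ValidateService` hook is where a health check fits: a failing script fails the deployment, which can trigger a rollback when that is enabled.

```python
# Hypothetical appspec for an EC2/on-premises in-place deployment,
# written as a dict mirroring appspec.yml. Script paths are illustrative.
APPSPEC = {
    "version": 0.0,
    "os": "linux",
    "files": [{"source": "/", "destination": "/opt/my-app"}],
    "hooks": {
        "BeforeInstall": [{"location": "scripts/stop_server.sh", "timeout": 60, "runas": "root"}],
        "AfterInstall": [{"location": "scripts/install_deps.sh", "timeout": 300, "runas": "root"}],
        "ApplicationStart": [{"location": "scripts/start_server.sh", "timeout": 60, "runas": "root"}],
        # A non-zero exit code here fails the deployment, which can trigger a
        # rollback if automatic rollback is enabled on the deployment group.
        "ValidateService": [{"location": "scripts/health_check.sh", "timeout": 120}],
    },
}

# Run order of the script-based hooks covered by this sketch for an in-place
# EC2/on-premises deployment (reserved events and load-balancer hooks omitted).
HOOK_ORDER = ["ApplicationStop", "BeforeInstall", "AfterInstall", "ApplicationStart", "ValidateService"]


def hooks_in_run_order(appspec: dict) -> list[str]:
    """Return the hooks defined in the appspec, sorted into CodeDeploy's run order."""
    defined = appspec.get("hooks", {})
    unknown = set(defined) - set(HOOK_ORDER)
    if unknown:
        raise ValueError(f"hooks not covered by this sketch: {sorted(unknown)}")
    return [hook for hook in HOOK_ORDER if hook in defined]


if __name__ == "__main__":
    print(" -> ".join(hooks_in_run_order(APPSPEC)))
```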

These services collaborate to establish an efficient and automated DevOps pipeline:

Developers can securely store and manage their code in CodeCommit repositories.

CodePipeline serves as the orchestrator for the CI/CD workflow, seamlessly integrating with CodeCommit as the source code provider.

CodeBuild performs code compilation, executes tests, and generates deployment artifacts as part of the build phase within CodePipeline.

CodeDeploy automates application deployment to EC2 instances or Lambda functions, adhering to the designated deployment strategy.
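The four-step flow above maps directly onto a pipeline definition. Here's a sketch that builds the structure CodePipeline's CreatePipeline API accepts, which in boto3 is `create_pipeline(pipeline=...)`. It has three stages, Source (CodeCommit), Build (CodeBuild), and Deploy (CodeDeploy), wired together by named artifacts. All names, the IAM role ARN, and the artifact bucket are hypothetical. The wiring check is a simplification that ignores `runOrder` within a stage.

```python
import json


def _action(category, provider, name, configuration, inputs=(), outputs=()):
    """One AWS-owned CodePipeline action (AWS-owned action types use version "1")."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS", "provider": provider, "version": "1"},
        "configuration": configuration,
        "inputArtifacts": [{"name": a} for a in inputs],
        "outputArtifacts": [{"name": a} for a in outputs],
        "runOrder": 1,
    }


def pipeline_definition(name, role_arn, artifact_bucket, repo, branch, build_project, app, group):
    """Source (CodeCommit) -> Build (CodeBuild) -> Deploy (CodeDeploy)."""
    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {"name": "Source", "actions": [_action(
                "Source", "CodeCommit", "Source",
                {"RepositoryName": repo, "BranchName": branch}, outputs=["SourceOutput"])]},
            {"name": "Build", "actions": [_action(
                "Build", "CodeBuild", "Build",
                {"ProjectName": build_project}, inputs=["SourceOutput"], outputs=["BuildOutput"])]},
            {"name": "Deploy", "actions": [_action(
                "Deploy", "CodeDeploy", "Deploy",
                {"ApplicationName": app, "DeploymentGroupName": group}, inputs=["BuildOutput"])]},
        ],
    }


def artifacts_are_wired(pipeline):
    """True if every input artifact is produced by an earlier stage (ignores runOrder)."""
    produced = set()
    for stage in pipeline["stages"]:
        for act in stage["actions"]:
            if any(a["name"] not in produced for a in act["inputArtifacts"]):
                return False
        for act in stage["actions"]:
            produced.update(a["name"] for a in act["outputArtifacts"])
    return True


if __name__ == "__main__":
    definition = pipeline_definition(
        "my-service-pipeline", "arn:aws:iam::123456789012:role/my-pipeline-role",
        "my-artifact-bucket", "my-service", "main", "my-service-build",
        "my-service", "my-service-prod")
    assert artifacts_are_wired(definition)
    # With credentials configured: boto3.client("codepipeline").create_pipeline(pipeline=definition)
    print(json.dumps(definition, indent=2))
```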

By using these services together, development teams get streamlined, automated software delivery and the following benefits:

Faster iterations: Continuous integration ensures that code changes are frequently integrated, facilitating faster feedback and reducing integration issues.

Higher quality: Continuous testing and automated builds enable quick detection of issues, ensuring software quality and reducing the risk of introducing bugs.

Efficient and automated deployment: Automated deployment pipelines enable faster and error-free deployments, reducing manual effort and minimizing downtime.

Improved collaboration: These services promote collaboration between development and operations teams, breaking down silos and fostering a shared responsibility for software delivery.

Scalability and flexibility: AWS services scale automatically based on workload demands, allowing teams to handle increased development and deployment requirements effectively.

How these services integrate with popular DevOps tools like Jenkins, Git, and Docker.

AWS services such as CodeCommit, CodePipeline, CodeBuild, and CodeDeploy integrate seamlessly with popular DevOps tools like Jenkins, Git, and Docker, enabling teams to leverage their existing toolsets and workflows. Here's how these AWS services integrate with each of these tools:

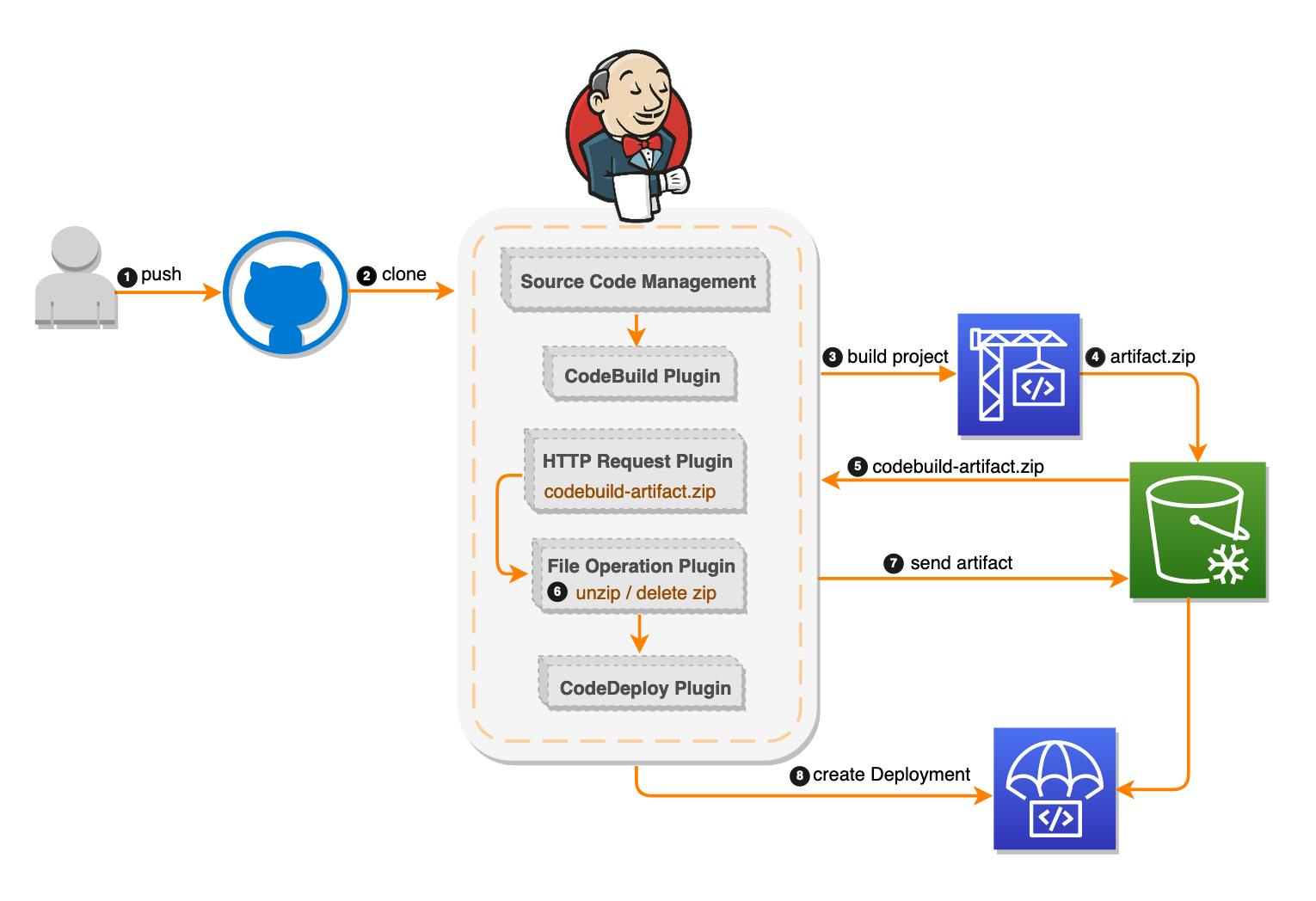

Integration with Jenkins:

CodeCommit: Jenkins can be configured to fetch source code from CodeCommit repositories. It can trigger build jobs whenever changes are detected in the repository, allowing for continuous integration.

CodePipeline: Jenkins can be integrated as a custom action in CodePipeline, allowing you to incorporate Jenkins build jobs within the pipeline stages. This enables you to leverage the flexibility and extensibility of Jenkins for specific build requirements.

CodeBuild: Jenkins can trigger CodeBuild jobs as part of the build process. This integration allows you to use Jenkins as the orchestrator while leveraging CodeBuild's managed build environment and scalability.
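As an alternative to a dedicated Jenkins plugin, a Jenkins job step can call CodeBuild through the AWS SDK and wait for the result. Here's a minimal sketch that uses the boto3 CodeBuild methods `start_build` and `batch_get_builds`. The client is passed in, so the included `FakeCodeBuild` stand-in lets the logic run without AWS. The project name is hypothetical. The sketch assumes the Jenkins Git plugin has set `GIT_COMMIT` and falls back to `main` otherwise.

```python
import os
import time

# Build statuses after which CodeBuild will not change the status again.
TERMINAL_STATUSES = {"SUCCEEDED", "FAILED", "FAULT", "STOPPED", "TIMED_OUT"}


def run_codebuild(client, project_name, source_version, poll_seconds=15.0, sleep=time.sleep):
    """Start a CodeBuild build for a commit and block until it finishes.

    `client` is a boto3 CodeBuild client (boto3.client("codebuild")) or any object
    with the same start_build / batch_get_builds methods.
    """
    build_id = client.start_build(projectName=project_name, sourceVersion=source_version)["build"]["id"]
    while True:
        status = client.batch_get_builds(ids=[build_id])["builds"][0]["buildStatus"]
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)


class FakeCodeBuild:
    """Stand-in client for local testing; replays a scripted list of statuses."""

    def __init__(self, statuses):
        self.statuses = list(statuses)
        self.started_with = None

    def start_build(self, projectName, sourceVersion):
        self.started_with = (projectName, sourceVersion)
        return {"build": {"id": f"{projectName}:1", "buildStatus": "IN_PROGRESS"}}

    def batch_get_builds(self, ids):
        return {"builds": [{"id": ids[0], "buildStatus": self.statuses.pop(0)}]}


if __name__ == "__main__":
    # In a Jenkins job, GIT_COMMIT (set by the Git plugin) identifies the checked-out commit.
    commit = os.environ.get("GIT_COMMIT", "main")
    fake = FakeCodeBuild(["IN_PROGRESS", "SUCCEEDED"])
    print(run_codebuild(fake, "my-service-build", commit, sleep=lambda _: None))
```

In a real job, the script would exit with a non-zero code on any status other than `SUCCEEDED`, so Jenkins marks its own build as failed.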

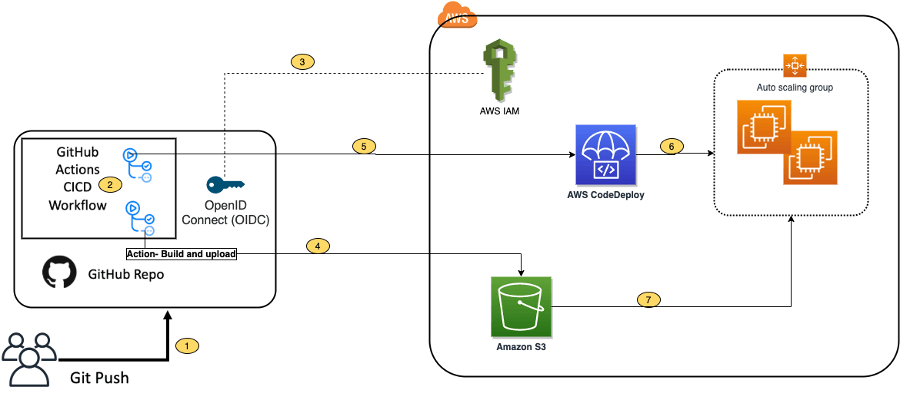

Integration with Git:

CodeCommit: CodeCommit is a fully managed Git repository service. It supports standard Git commands and protocols, making it easy to integrate with Git-based workflows. Developers can push, pull, and clone repositories using Git clients and tools.

CodePipeline: CodePipeline can use Git repositories as its source, either CodeCommit or hosted providers such as GitHub, GitLab, and Bitbucket (through a connection). It detects changes in the repository and triggers the subsequent stages in the pipeline, such as building and deploying the application.

CodeBuild: CodeBuild has built-in integration with Git. It can automatically fetch the source code from Git repositories and perform the build process. CodeBuild supports authentication mechanisms for accessing private Git repositories.

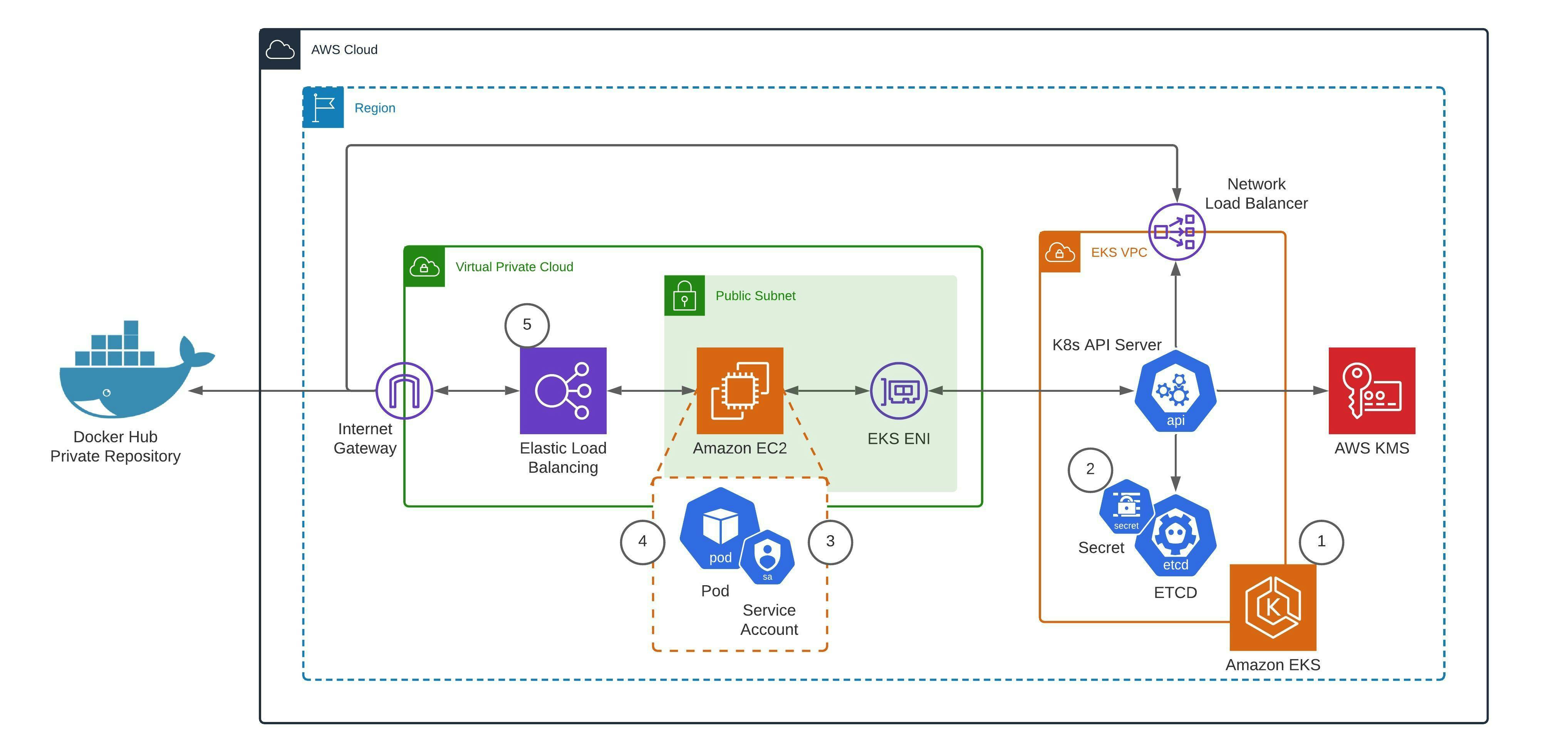

Integration with Docker:

CodeBuild: CodeBuild provides native support for building Docker images. You define the steps to build, test, and package Docker containers in the build specification (buildspec) file. The project's build environment must have privileged mode enabled so that the Docker daemon is available. This allows you to incorporate Docker-based builds into your CI/CD pipeline.

CodePipeline: CodePipeline works with container registries such as Amazon Elastic Container Registry (ECR). A build action in the pipeline, typically CodeBuild, pushes the new image to the registry, and an ECR source action can start a pipeline whenever a new image is pushed. This enables seamless integration with Docker-based deployments.

CodeDeploy: CodeDeploy can deploy containerized applications to Amazon ECS services using blue/green deployments. It shifts traffic to the new version all at once, in a canary step, or linearly. Rolling updates of ECS services are handled by ECS's own deployment controller. For applications that run Docker on EC2 instances, CodeDeploy lifecycle scripts can pull and start the new image.
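The Docker flow above usually comes down to a few shell commands run inside CodeBuild and a small JSON file handed to the deploy step. Here's a sketch that generates both for a hypothetical account, Region, repository, and tag. The login line follows the documented `aws ecr get-login-password | docker login` pattern. `imagedefinitions.json` is the file read by CodePipeline's standard Amazon ECS deploy action; the ECS blue/green (CodeDeploy) action reads `imageDetail.json` instead, which this sketch does not cover.

```python
import json


def ecr_registry(account_id: str, region: str) -> str:
    """Registry host name for a private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Full image reference: <registry>/<repository>:<tag>."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def docker_push_commands(account_id, region, repository, tag):
    """Shell commands a buildspec's build/post_build phases might run to publish an image."""
    registry = ecr_registry(account_id, region)
    image = ecr_image_uri(account_id, region, repository, tag)
    return [
        f"aws ecr get-login-password --region {region} | docker login --username AWS --password-stdin {registry}",
        f"docker build -t {repository}:{tag} .",
        f"docker tag {repository}:{tag} {image}",
        f"docker push {image}",
    ]


def image_definitions(container_name: str, image_uri: str) -> str:
    """Contents of imagedefinitions.json for CodePipeline's standard Amazon ECS deploy action."""
    return json.dumps([{"name": container_name, "imageUri": image_uri}])


if __name__ == "__main__":
    # Hypothetical account, Region, repository, and tag (e.g. a short commit SHA).
    for cmd in docker_push_commands("123456789012", "us-east-1", "web", "abc1234"):
        print(cmd)
    print(image_definitions("web", ecr_image_uri("123456789012", "us-east-1", "web", "abc1234")))
```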

By integrating with these popular DevOps tools, AWS services provide flexibility and interoperability, allowing teams to leverage their preferred tools and workflows while benefiting from the scalable infrastructure and automation capabilities of AWS.

Continuous Integration (CI) with AWS:

Concept of continuous integration and its role in DevOps:

Continuous Integration (CI) is a software development practice that emphasizes frequent and automated integration of code changes into a shared repository. It involves merging individual developers' code changes into a central repository multiple times a day, followed by automated build and testing processes to validate the changes. The key principles of continuous integration include:

Frequent Code Integration: Developers regularly integrate their code changes into a shared repository, ensuring that changes are synchronized with the main codebase as early as possible.

Automated Build and Testing: After code integration, an automated build process is triggered to compile the code, package it, and run a series of automated tests to verify its correctness. This ensures that the integrated code does not break the existing functionality.

Fast Feedback Loop: Continuous integration provides quick feedback to developers about the quality and compatibility of their code changes. It helps detect and resolve integration issues early in the development process.

The role of continuous integration in DevOps is significant and supports the following objectives:

Reduced Integration Risks: By integrating code changes frequently, continuous integration reduces the risk of conflicts and integration issues that can arise when developers work in isolation for extended periods. It allows for early detection and resolution of integration problems, preventing the accumulation of code conflicts.

Early Bug Detection: Automated build and testing processes in continuous integration help catch bugs and issues quickly. By identifying issues early in the development cycle, teams can address them promptly, reducing the time and effort required for bug fixing.

Accelerated Feedback Loop: Continuous integration provides developers with rapid feedback on the impact of their code changes. This immediate feedback loop allows for the early identification of issues, enabling faster iterations and reducing the time between making changes and receiving feedback.

Improved Collaboration: Continuous integration promotes collaboration among development team members. By frequently integrating their code changes, developers can work more closely together, share knowledge, and resolve conflicts promptly.

Higher Software Quality: The continuous integration process ensures that the codebase remains in a stable and working state. Regular automated testing helps catch errors and regressions early, resulting in higher software quality.

Enabler for Continuous Delivery and Deployment: Continuous integration lays the foundation for continuous delivery and deployment practices. It provides a reliable and consistent process for integrating and testing code changes, which can then be seamlessly integrated into automated deployment pipelines.

By adopting continuous integration as part of the DevOps approach, organizations can foster collaboration, improve code quality, accelerate development cycles, and ultimately deliver software more efficiently and reliably.

An example CI pipeline using AWS services, highlighting the integration and automation aspects:

Here's a simplified illustration of the CI pipeline:

Source Code Management:

Developers use Git and store their code in AWS CodeCommit repositories.

Each developer creates a feature branch and commits their changes to the repository.

Continuous Integration Pipeline:

Trigger: CodePipeline is configured to monitor the CodeCommit repository for changes.

Stage 1: Source

CodePipeline detects a new commit in the repository.

It retrieves the latest source code from CodeCommit.

Stage 2: Build

CodePipeline triggers CodeBuild, which runs the commands defined in the project's build specification (buildspec).

CodeBuild automatically provisions a build environment and performs the build process.

Build outputs (artifacts) are generated and stored in the pipeline's artifact store, an Amazon S3 bucket.

Stage 3: Test

CodePipeline can run test actions, such as a CodeBuild project that executes unit and integration tests, or a third-party testing service.

Automated tests are executed against the built artifacts to ensure code quality.

Stage 4: Deployment (Optional)

CodePipeline can be configured to deploy the application to a test environment, such as an EC2 instance or an AWS Lambda function.

CodeDeploy is integrated to automate the deployment process, ensuring consistency and minimizing human error.

Stage 5: Notification

- CodePipeline can send notifications or trigger alerts to relevant stakeholders, such as email notifications or integration with messaging services like Amazon SNS or Slack.

Post-Build Actions:

- The CI pipeline can be configured to perform additional actions, such as archiving build artifacts, publishing build reports, or triggering downstream processes like CD pipelines.
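CodePipeline handles stage sequencing itself. The toy model below only illustrates the control flow described above: stages run in order, the first failure stops the run, and a notification goes out either way. The stage names follow the list above. The step functions are hypothetical stand-ins for real actions, not CodePipeline's implementation.

```python
from typing import Callable, List, Optional, Tuple

# A stage is a name plus a step that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_ci(stages: List[Stage], notify: Callable[[str], None]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order, stop at the first failure, and always send a notification.

    Returns (completed stage names, name of the failed stage or None).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            notify(f"Pipeline FAILED at stage {name!r} after {completed}")
            return completed, name
        completed.append(name)
    notify("Pipeline SUCCEEDED")
    return completed, None


if __name__ == "__main__":
    messages: List[str] = []
    # Hypothetical steps: the Test stage fails, so Deployment never runs.
    stages = [
        ("Source", lambda: True),
        ("Build", lambda: True),
        ("Test", lambda: False),
        ("Deployment", lambda: True),
    ]
    print(run_ci(stages, messages.append))
    print(messages)
```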

By using AWS services, this CI pipeline exhibits the following integration and automation aspects:

Integration with CodeCommit: CodePipeline seamlessly integrates with CodeCommit as the source code provider, allowing for the automated detection of code changes and triggering the pipeline execution.

Build Automation with CodeBuild: CodeBuild automates the build process by automatically provisioning build environments, fetching the source code from CodeCommit, and executing the build specifications.

Testing Automation: CodePipeline can integrate with various testing tools and services to automate the testing phase, ensuring code quality and identifying issues early in the pipeline.

Deployment Automation with CodeDeploy: CodePipeline can be configured to deploy the application to a test environment using CodeDeploy, automating the deployment process and ensuring consistency.

End-to-end Automation: The entire CI pipeline is automated, triggered by code changes and progressing through the stages without manual intervention. This enables fast and consistent feedback on code changes.

Flexibility and Extensibility: AWS services allow for customization and integration with other tools and services, enabling teams to tailor the pipeline to their specific requirements and integrate with their preferred testing and deployment tools.

This example CI pipeline showcases how AWS services seamlessly integrate and automate the different stages of the CI process, enabling development teams to achieve efficient and reliable software delivery.

Continuous Delivery (CD) with AWS:

Continuous delivery and its significance in DevOps:

Continuous Delivery (CD) is a software development practice that extends the principles of continuous integration (CI) to enable the rapid and automated delivery of software to production environments. It focuses on automating the entire release process, from code integration to deployment, to make software delivery more frequent, predictable, and reliable. The key principles of continuous delivery include:

Automated Deployment: Continuous delivery emphasizes the use of automation to deploy software to various environments, including development, testing, staging, and production. The deployment process is repeatable, reliable, and requires minimal manual intervention.

Continuous Testing: CD promotes continuous testing throughout the software development lifecycle. It includes various types of testing, such as unit tests, integration tests, acceptance tests, and performance tests, to ensure the quality and correctness of the software.

Configuration Management: Continuous delivery advocates for managing the application's configuration as code. This approach ensures that the deployment artifacts are consistent across environments and allows for easy configuration changes as part of the deployment process.

Release Orchestration: CD emphasizes the importance of release orchestration, where different stages of the deployment pipeline are coordinated, and dependencies are managed to ensure smooth and reliable software releases.

The significance of continuous delivery in DevOps is substantial, as it brings several benefits to software development and operations:

Reduced Time-to-Market: Continuous delivery enables faster software releases, allowing organizations to deliver value to customers more frequently. It shortens the time from development to deployment, accelerating the feedback loop and enabling quicker iterations.

Improved Reliability: By automating the deployment process and ensuring consistency across environments, continuous delivery reduces the risk of human errors and misconfigurations. It increases the reliability of software releases, making them more predictable and repeatable.

Higher Quality: Continuous delivery promotes a rigorous testing approach, where tests are executed continuously throughout the development process. This leads to the early detection of bugs, issues, and regressions, resulting in higher software quality.

Enhanced Collaboration: Continuous delivery fosters collaboration between development and operations teams. By automating and streamlining the release process, it encourages shared responsibility, transparency, and effective communication between teams.

Rapid Feedback Loop: Continuous delivery provides quick feedback on the impact of code changes, allowing teams to identify and address issues early in the development cycle and to iterate quickly based on user feedback.

Scalability and Flexibility: Continuous delivery is highly scalable and adaptable to evolving requirements. It allows organizations to handle increased software releases, easily accommodate changes, and adapt to different deployment environments and architectures.

Continuous delivery, when combined with continuous integration and other DevOps practices, enables organizations to build a culture of continuous improvement and innovation. It establishes a reliable and efficient software delivery pipeline, empowering teams to deliver high-quality software more frequently and with greater confidence.

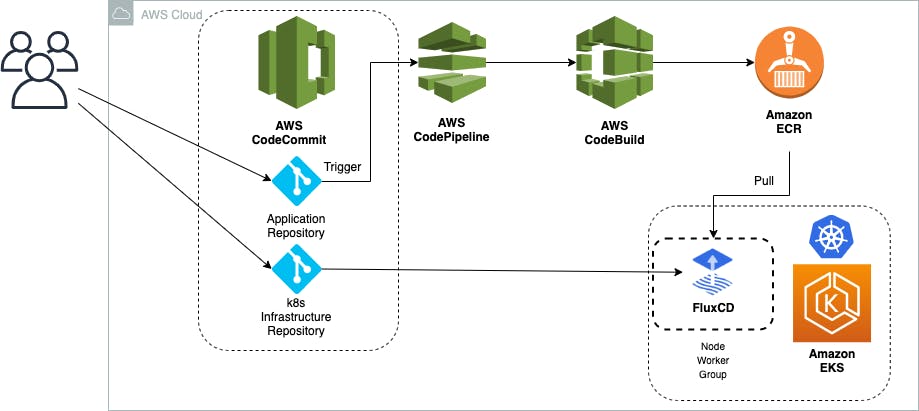

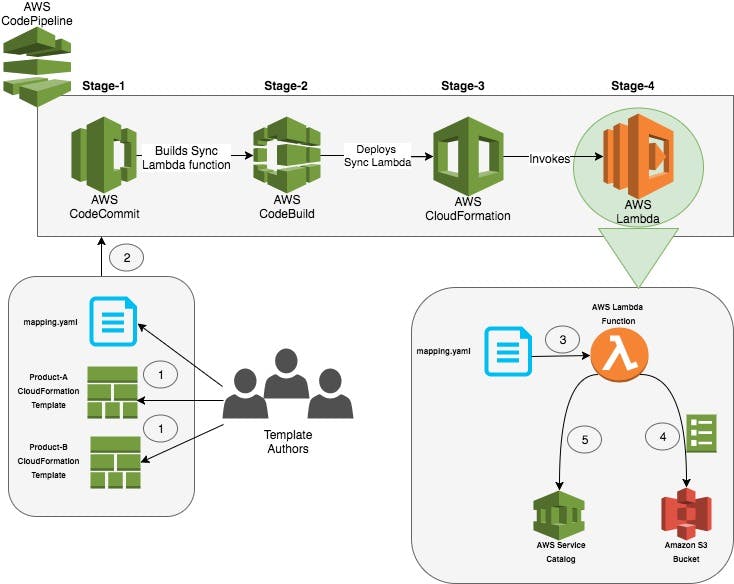

End-to-end CD pipeline using AWS services, covering stages like build, test, and deploy.

This section walks through an end-to-end Continuous Delivery (CD) pipeline built with AWS services, covering stages such as build, test, and deploy. Here's an overview of the pipeline:

Source Code Management:

Developers store and version control their code in AWS CodeCommit repositories.

They work on feature branches and push their changes to the repository.

Continuous Integration:

The CI pipeline described earlier is triggered by code changes in CodeCommit.

CodePipeline integrates with CodeCommit and CodeBuild for automated build and testing processes.

CodeBuild compiles the code, runs tests, and generates build artifacts.

Continuous Testing:

- CodePipeline triggers the execution of various tests, such as unit tests, integration tests, and acceptance tests, using tools like AWS CodeBuild, AWS Lambda, or third-party testing frameworks.

Artifact Repository:

Build artifacts are stored in an artifact repository, such as Amazon S3, Amazon ECR (for container images), or a custom repository.

These artifacts are the output of the build and testing stages and will be used for deployment.

Infrastructure Provisioning:

- Infrastructure as Code (IaC) tools, such as AWS CloudFormation or AWS CDK, are used to provision the necessary infrastructure resources for deployment, such as EC2 instances, AWS Lambda functions, or ECS clusters.

Configuration Management:

- Configuration management tools, like AWS Systems Manager Parameter Store or AWS Secrets Manager, are utilized to manage and store application configurations securely.
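A common way to apply this is to keep one parameter path per environment and have the application read its settings at startup. Here's a sketch using the boto3 SSM method `get_parameter(Name=..., WithDecryption=True)`, which returns `SecureString` values decrypted. The `/<app>/<env>/<key>` naming convention and all names are hypothetical. The client is passed in, so the `FakeSSM` stand-in lets the code run locally.

```python
def parameter_name(app: str, env: str, key: str) -> str:
    """Hypothetical naming convention: /<app>/<env>/<key>."""
    return f"/{app}/{env}/{key}"


def load_config(ssm_client, app: str, env: str, keys: list[str]) -> dict[str, str]:
    """Read one environment's settings from Parameter Store.

    `ssm_client` is a boto3 SSM client (boto3.client("ssm")) or a compatible stub.
    WithDecryption=True returns SecureString values in plaintext.
    """
    config = {}
    for key in keys:
        response = ssm_client.get_parameter(Name=parameter_name(app, env, key), WithDecryption=True)
        config[key] = response["Parameter"]["Value"]
    return config


class FakeSSM:
    """In-memory stand-in for local testing."""

    def __init__(self, store: dict[str, str]):
        self.store = store

    def get_parameter(self, Name: str, WithDecryption: bool = False) -> dict:
        return {"Parameter": {"Name": Name, "Value": self.store[Name]}}


if __name__ == "__main__":
    fake = FakeSSM({"/my-app/prod/db_host": "db.prod.internal", "/my-app/dev/db_host": "localhost"})
    # Same code, different environment: only the env argument changes.
    print(load_config(fake, "my-app", "prod", ["db_host"]))
    print(load_config(fake, "my-app", "dev", ["db_host"]))
```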

Deployment Automation:

CodePipeline integrates with AWS CodeDeploy for automated application deployments.

CodeDeploy uses the build artifacts and deploys the application to the target environment, following the defined deployment strategy.

Deployment strategies can include rolling updates, blue/green deployments, or canary releases.
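To make the strategies concrete, the sketch below computes traffic-shifting schedules as `(minutes after start, cumulative % on the new version)` pairs, plus the batches of an in-place rolling update. The canary and linear shapes mirror CodeDeploy's predefined configurations such as `CodeDeployDefault.LambdaCanary10Percent5Minutes` and `CodeDeployDefault.LambdaLinear10PercentEvery1Minute`. This is a simplified model for illustration, not CodeDeploy's implementation, and the instance IDs are hypothetical.

```python
def all_at_once():
    """Blue/green with a single cutover: 100% of traffic moves at t=0."""
    return [(0, 100)]


def canary(first_percent: int, wait_minutes: int):
    """Shift first_percent, wait, then shift the rest in one step."""
    if not 0 < first_percent < 100:
        raise ValueError("first_percent must be between 1 and 99")
    return [(0, first_percent), (wait_minutes, 100)]


def linear(step_percent: int, interval_minutes: int):
    """Shift step_percent every interval until all traffic has moved."""
    if step_percent <= 0:
        raise ValueError("step_percent must be positive")
    schedule, minute, percent = [], 0, 0
    while percent < 100:
        percent = min(100, percent + step_percent)
        schedule.append((minute, percent))
        minute += interval_minutes
    return schedule


def rolling_batches(instances: list[str], batch_size: int) -> list[list[str]]:
    """In-place rolling update: deploy to one batch of instances at a time."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [instances[i:i + batch_size] for i in range(0, len(instances), batch_size)]


if __name__ == "__main__":
    print("canary:", canary(10, 5))
    print("linear:", linear(10, 1))
    print("rolling:", rolling_batches(["i-1", "i-2", "i-3", "i-4", "i-5"], 2))
```

Canary limits the exposure of a bad release to a small slice of traffic for a fixed window. Linear spreads the risk over several steps. A rolling update needs no spare capacity but leaves old and new versions serving side by side until the last batch finishes.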

Post-Deployment Testing:

- CodePipeline can trigger additional post-deployment tests, such as smoke tests or end-to-end tests, to verify the functionality and health of the deployed application.

Approval and Release Orchestration:

CodePipeline can include manual approval stages, where designated stakeholders review and approve the release before proceeding to the next stage.

Release orchestration is managed by CodePipeline, coordinating the various stages and dependencies.
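A manual approval is an ordinary pipeline action in the `Approval` category with the `Manual` provider. Here's a sketch that builds such a stage and slots it in front of a deployment stage. `NotificationArn` (an SNS topic for approvers) and `CustomData` (a message shown to the reviewer) are optional configuration fields. The topic, message, and stage names are hypothetical. The stage list in the demo is simplified to names only.

```python
from typing import Optional


def manual_approval_stage(notification_arn: Optional[str] = None,
                          custom_data: Optional[str] = None) -> dict:
    """A pipeline stage containing one AWS Manual approval action."""
    action = {
        "name": "ManualApproval",
        "actionTypeId": {"category": "Approval", "owner": "AWS", "provider": "Manual", "version": "1"},
        "runOrder": 1,
    }
    configuration = {}
    if notification_arn:
        configuration["NotificationArn"] = notification_arn  # SNS topic that notifies approvers
    if custom_data:
        configuration["CustomData"] = custom_data  # message shown to the reviewer
    if configuration:
        action["configuration"] = configuration
    return {"name": "Approve", "actions": [action]}


def insert_before(stages: list[dict], stage_name: str, new_stage: dict) -> list[dict]:
    """Return a new stage list with new_stage placed immediately before stage_name."""
    for index, stage in enumerate(stages):
        if stage["name"] == stage_name:
            return stages[:index] + [new_stage] + stages[index:]
    raise KeyError(f"no stage named {stage_name!r}")


if __name__ == "__main__":
    # Simplified stage dicts (names only) to show where the gate lands.
    stages = [{"name": "Source"}, {"name": "Build"}, {"name": "Deploy"}]
    gated = insert_before(stages, "Deploy", manual_approval_stage(custom_data="Review the staging test report"))
    print([s["name"] for s in gated])
```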

Monitoring and Feedback:

Monitoring tools, like Amazon CloudWatch or AWS X-Ray, can be integrated to monitor the application's performance metrics, logs, and request traces.

Any issues or performance anomalies can trigger alerts and notifications to relevant teams.
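As one concrete alerting setup, here's a sketch that builds the arguments for CloudWatch's PutMetricAlarm (boto3: `put_metric_alarm(**params)`). The alarm watches the built-in `AWS/Lambda` `Errors` metric and notifies an SNS topic. The function name, topic ARN, and thresholds are hypothetical. The `would_alarm` helper is a simplified local model of the alarm rule, assuming the default where every evaluation period must breach and missing data counts as not breaching.

```python
def lambda_errors_alarm(function_name: str, topic_arn: str, threshold: float = 5.0) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm: alarm when the function's
    Errors sum reaches `threshold` in each of 5 consecutive 1-minute periods."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 60,
        "EvaluationPeriods": 5,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [topic_arn],  # e.g. an SNS topic that notifies the on-call team
    }


def would_alarm(datapoints: list[float], threshold: float, evaluation_periods: int) -> bool:
    """Simplified local model: alarm only if the last N datapoints all breach.

    Fewer than N datapoints never alarms, matching TreatMissingData="notBreaching".
    """
    recent = datapoints[-evaluation_periods:]
    return len(recent) == evaluation_periods and all(v >= threshold for v in recent)


if __name__ == "__main__":
    params = lambda_errors_alarm("my-function", "arn:aws:sns:us-east-1:123456789012:oncall")
    # With credentials configured: boto3.client("cloudwatch").put_metric_alarm(**params)
    print(params["AlarmName"], would_alarm([0, 6, 7, 5, 9, 6], params["Threshold"], params["EvaluationPeriods"]))
```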

By leveraging AWS services, this end-to-end CD pipeline showcases the following aspects:

Automation: The entire pipeline is automated. It is triggered by code changes and progresses through the stages without manual intervention, apart from any approval stages you choose to add.

Scalability and Flexibility: AWS services allow for scalability and adaptability, accommodating changes in infrastructure and deployment environments.

Integration and Interoperability: AWS services integrate seamlessly, enabling the use of different services for source control, build, testing, deployment, and monitoring.

Infrastructure as Code: The pipeline includes infrastructure provisioning using IaC tools, allowing for repeatable and consistent infrastructure setups.

Release Orchestration: CodePipeline coordinates the release process, managing dependencies and allowing for manual approvals when necessary.

Continuous Feedback: Monitoring and feedback mechanisms are integrated to provide insights into the application's performance and user experience.

This end-to-end CD pipeline with AWS services streamlines and automates the software delivery process, enabling organizations to achieve faster, more reliable, and consistent deployments while maintaining high-quality standards.

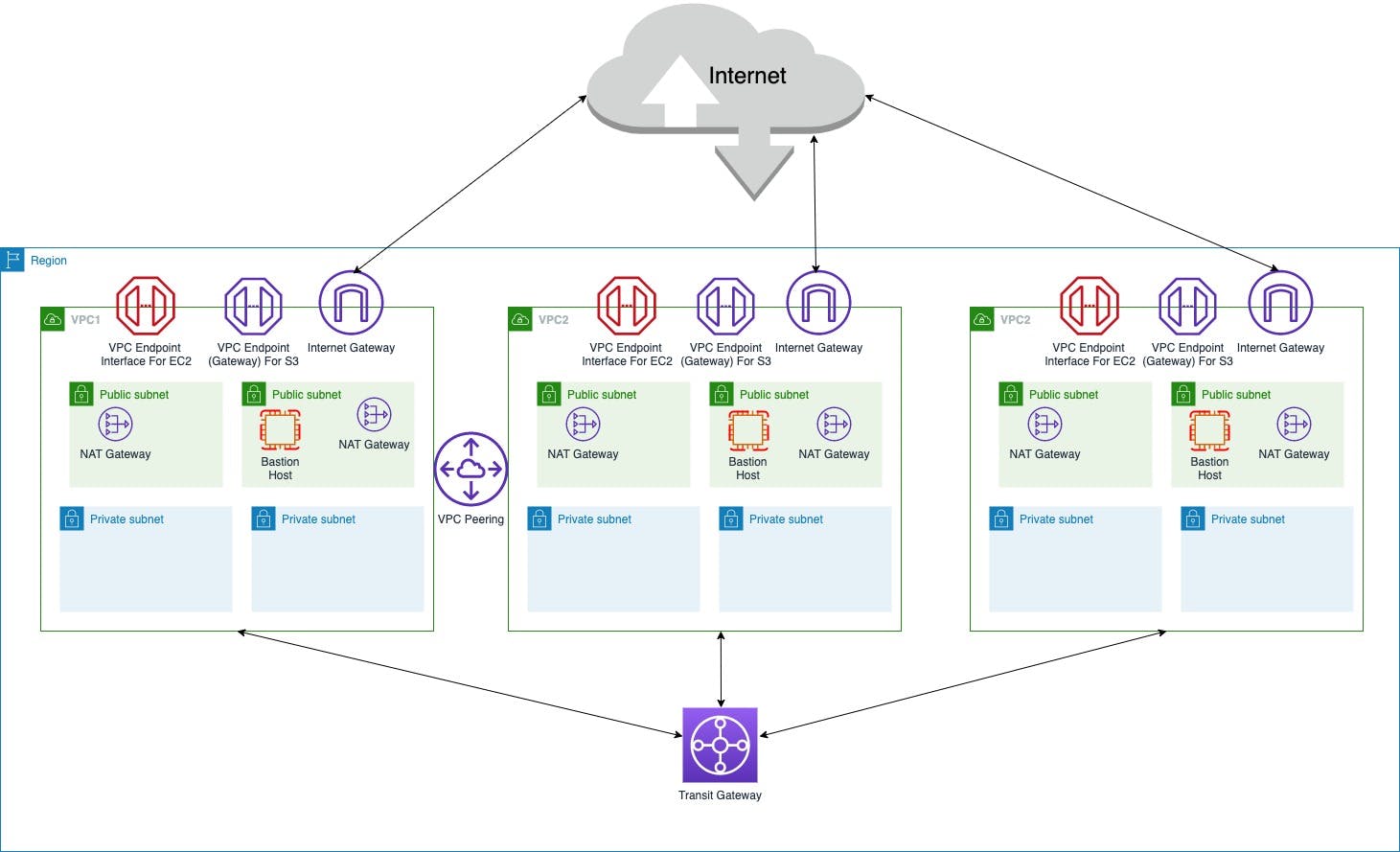

Infrastructure as Code (IaC) with AWS:

Benefits of Infrastructure as Code in achieving infrastructure consistency and repeatability:

Infrastructure as Code (IaC) is a practice that involves managing and provisioning infrastructure resources, such as servers, networks, and databases, using machine-readable configuration files or scripts. These configuration files are treated as code and can be version controlled, tested, and deployed alongside application code. The benefits of Infrastructure as Code in achieving infrastructure consistency and repeatability are as follows:

Consistency: With IaC, infrastructure is defined and provisioned through code, ensuring that the desired state of the infrastructure is consistent across all environments. Configuration files specify the exact resources, settings, and dependencies required, eliminating manual configuration drift or human error that may occur when setting up infrastructure manually.

Repeatability: Infrastructure provisioning becomes a repeatable process using IaC. The same configuration files or scripts can be used to provision infrastructure across different environments, such as development, testing, staging, and production. This ensures that the infrastructure setup is consistent and reproducible, reducing the risk of environment-specific issues and inconsistencies.

Version Control and Collaboration: Infrastructure as Code files can be version controlled using tools like Git, enabling tracking of changes, collaboration, and easy rollback if needed. Teams can work collaboratively on infrastructure configurations, review changes, and maintain a history of infrastructure updates. This promotes transparency, accountability, and efficient collaboration among team members.

Automation: IaC allows for the automation of infrastructure provisioning. Infrastructure configurations can be executed programmatically, either through continuous integration/continuous deployment (CI/CD) pipelines or infrastructure orchestration tools like AWS CloudFormation or AWS CDK. Automation minimizes manual intervention, reduces the time required to set up and modify infrastructure, and eliminates potential human errors.

Scalability: Infrastructure as Code enables scaling of infrastructure resources by simply modifying the code or configuration files. It allows for easily replicating infrastructure components, adding more resources, or adjusting resource capacities based on changing application demands. This scalability is achieved by making changes in the code and executing the updated configuration, providing a flexible and efficient way to handle varying workloads.

Auditing and Compliance: Infrastructure configurations defined as code provide an auditable trail of changes and ensure compliance with organizational standards and security policies. The ability to track and review changes in infrastructure code helps in maintaining compliance with regulations and best practices.

Disaster Recovery and High Availability: IaC facilitates disaster recovery and high availability strategies. Infrastructure configurations can be designed to include redundancy, automated backups, and failover mechanisms. In case of a disaster or failure, infrastructure can be quickly replicated or recovered using the same codebase, ensuring business continuity.

By adopting Infrastructure as Code, organizations gain the ability to treat infrastructure provisioning as a software engineering practice. It brings consistency, repeatability, automation, and scalability to infrastructure management, reducing human errors, increasing efficiency, and enabling rapid and reliable deployments.

AWS CloudFormation:

AWS CloudFormation is a service provided by Amazon Web Services (AWS) that enables the provisioning and management of AWS resources using declarative templates.

AWS Elastic Beanstalk:

AWS Elastic Beanstalk is a fully managed service that makes it easy to deploy and run applications in multiple languages.

Example of provisioning and managing infrastructure using AWS CloudFormation or AWS Elastic Beanstalk:

AWS CloudFormation is a service that allows you to define and provision your infrastructure resources in a declarative manner using YAML or JSON templates. These templates describe the desired state of your infrastructure, including resources such as EC2 instances, load balancers, databases, and more. Here's a simplified example:

Create a CloudFormation Template: Create a YAML or JSON file that describes the desired infrastructure resources and their configurations. For example, you might define an EC2 instance, an RDS database, and a load balancer.

Define the Resources: Within the template, define the AWS resources you want to provision, including their properties and dependencies. Specify the desired configuration, such as instance type, security groups, database engine, and storage settings.

Upload the Template: Upload the CloudFormation template to an S3 bucket, or provide it directly when creating a CloudFormation stack (templates larger than 51,200 bytes must be uploaded to S3).

Create a CloudFormation Stack: In the AWS Management Console or using the AWS CLI, create a CloudFormation stack by providing the template location, stack name, and any input parameters required by the template.

Resource Provisioning: CloudFormation orchestrates the creation of the specified resources, ensuring their configuration matches the template. It handles the provisioning of resources, their dependencies, and any necessary configurations, such as security groups and access policies.

Stack Management: CloudFormation provides stack management capabilities, allowing you to update or delete a stack as a single unit. You can modify the template, update resource configurations, or add/remove resources as needed. If a stack creation or update fails, CloudFormation rolls back to the last known stable state by default.

Infrastructure Updates: Before applying a template change, you can create a change set to preview the modifications CloudFormation will make. When the update runs, CloudFormation creates new resources, updates existing ones, or deletes resources that were removed from the template.

Automation and Integration: CloudFormation integrates with other AWS services and tools, such as AWS CodePipeline and AWS CodeBuild, to enable continuous delivery and automation of infrastructure provisioning.
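Here's a minimal sketch of the steps above. To keep it short, it uses a single versioned S3 bucket rather than the EC2/RDS/load balancer combination mentioned earlier. The template is built as a dict and serialized to JSON. The helpers return keyword arguments for the CloudFormation CreateStack and CreateChangeSet APIs (boto3: `create_stack`, `create_change_set`). The stack name, change set name, and `Environment` parameter are hypothetical.

```python
import json


def artifact_bucket_template() -> dict:
    """A minimal CloudFormation template: one versioned S3 bucket tagged by environment."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Versioned S3 bucket (example)",
        "Parameters": {
            "Environment": {"Type": "String", "AllowedValues": ["dev", "staging", "prod"], "Default": "dev"},
        },
        "Resources": {
            "ArtifactBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "Tags": [{"Key": "Environment", "Value": {"Ref": "Environment"}}],
                },
            },
        },
        "Outputs": {"BucketArn": {"Value": {"Fn::GetAtt": ["ArtifactBucket", "Arn"]}}},
    }


def create_stack_args(stack_name: str, environment: str) -> dict:
    """Keyword arguments for CloudFormation CreateStack (boto3: create_stack(**args))."""
    return {
        "StackName": stack_name,
        "TemplateBody": json.dumps(artifact_bucket_template()),
        "Parameters": [{"ParameterKey": "Environment", "ParameterValue": environment}],
    }


def update_change_set_args(stack_name: str, environment: str, change_set_name: str) -> dict:
    """Keyword arguments for CreateChangeSet, to preview an update before executing it."""
    args = create_stack_args(stack_name, environment)
    args.update(ChangeSetName=change_set_name, ChangeSetType="UPDATE")
    return args


if __name__ == "__main__":
    # Hypothetical stack name. With credentials configured:
    #   cfn = boto3.client("cloudformation")
    #   cfn.create_stack(**create_stack_args("artifacts-dev", "dev"))
    #   cfn.create_change_set(**update_change_set_args("artifacts-dev", "dev", "add-tags"))
    #   cfn.execute_change_set(ChangeSetName="add-tags", StackName="artifacts-dev")
    print(create_stack_args("artifacts-dev", "dev")["TemplateBody"])
```

Because the template is code, the same definition can create `dev`, `staging`, and `prod` stacks that differ only in their parameter values.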

Now let's briefly discuss an example using AWS Elastic Beanstalk, another service that simplifies application deployment and management:

Create an Application: Create an Elastic Beanstalk application, then choose the platform (e.g., Node.js, Java, Python) and environment type (a single instance, or a load-balanced environment with auto scaling).

Upload Application Code: Package your application code and upload it to Elastic Beanstalk, either through the AWS Management Console, CLI, or an integration with your CI/CD pipeline.

Configure Environment: Set environment-specific configurations such as instance types, scaling options, load balancers, and environment variables. Elastic Beanstalk provides a range of customization options to suit your application requirements.

Launch Environment: Launch an environment within the Elastic Beanstalk application. This provisions the necessary infrastructure resources, such as EC2 instances, a load balancer (for load-balanced environments), and, optionally, an Amazon RDS database.

Application Deployment: Elastic Beanstalk deploys your application code to the provisioned environment, ensuring proper configuration and dependencies are met.

Environment Management: Elastic Beanstalk provides a management console to monitor the health and performance of your environment. It allows you to scale your application, update configurations, and roll back to previous versions if needed.
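The upload, deploy, and roll-back steps above can also be scripted, which is how a CI/CD pipeline would drive Elastic Beanstalk. Here's a sketch using the boto3 Elastic Beanstalk methods `create_application_version` and `update_environment`. It assumes the source bundle has already been uploaded to S3. Rolling back is modeled as redeploying an earlier, already-registered version label. All names are hypothetical. The `RecordingEB` stand-in records calls instead of contacting AWS.

```python
def deploy_version(eb_client, application: str, environment: str,
                   version_label: str, s3_bucket: str, s3_key: str) -> dict:
    """Register a source bundle already in S3 as a new application version,
    then point the environment at it.

    `eb_client` is a boto3 Elastic Beanstalk client (boto3.client("elasticbeanstalk"))
    or a compatible stub.
    """
    eb_client.create_application_version(
        ApplicationName=application,
        VersionLabel=version_label,
        SourceBundle={"S3Bucket": s3_bucket, "S3Key": s3_key},
    )
    return eb_client.update_environment(EnvironmentName=environment, VersionLabel=version_label)


def roll_back(eb_client, environment: str, previous_label: str) -> dict:
    """Roll back by redeploying an earlier, already-registered version label."""
    return eb_client.update_environment(EnvironmentName=environment, VersionLabel=previous_label)


class RecordingEB:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def create_application_version(self, **kwargs):
        self.calls.append(("create_application_version", kwargs["VersionLabel"]))
        return {}

    def update_environment(self, **kwargs):
        self.calls.append(("update_environment", kwargs["VersionLabel"]))
        return {"EnvironmentName": kwargs["EnvironmentName"], "VersionLabel": kwargs["VersionLabel"]}


if __name__ == "__main__":
    eb = RecordingEB()
    deploy_version(eb, "my-app", "my-app-prod", "v42", "my-bundles", "my-app/v42.zip")
    roll_back(eb, "my-app-prod", "v41")
    print(eb.calls)
```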

Both AWS CloudFormation and AWS Elastic Beanstalk offer powerful capabilities for provisioning and managing infrastructure but with different levels of control and complexity. CloudFormation provides full control over infrastructure resources and their configurations, allowing you to define and manage complex infrastructure setups. Elastic Beanstalk, on the other hand, abstracts away the underlying infrastructure details and provides a simpler way to deploy and manage applications without the need for manual provisioning and configuration.

Monitoring and Logging in AWS:

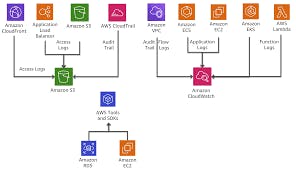

Distributed Monitoring

Centralized Logging

Importance of monitoring and logging in a DevOps environment:

Monitoring and logging play a crucial role in a DevOps environment by providing visibility, insights, and actionable data about the performance, health, and behavior of applications and infrastructure. Here are the key reasons why monitoring and logging are important in a DevOps environment:

Performance and Availability: Monitoring allows teams to track and measure the performance and availability of applications and infrastructure in real time. It helps identify performance bottlenecks, resource constraints, and potential issues that could impact the user experience. By monitoring metrics such as response times, throughput, and error rates, teams can proactively address performance issues and ensure high availability.
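As a rough illustration of those three metrics, the sketch below summarizes one window of request records into throughput, error rate, and 95th-percentile latency. The `Request` record, the choice to count only HTTP 5xx responses as errors, and the nearest-rank percentile method are assumptions. In practice, CloudWatch can compute percentile statistics for you when you publish latency as a metric.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def summarize(requests: List[Request], window_seconds: float) -> Dict[str, float]:
    """Throughput, error rate, and p95 latency for one window of requests."""
    if not requests or window_seconds <= 0:
        raise ValueError("need at least one request and a positive window")
    latencies = sorted(r.latency_ms for r in requests)
    # Nearest-rank p95: ceil(0.95 * n), computed with integers to avoid float error.
    rank = (95 * len(latencies) + 99) // 100
    server_errors = sum(1 for r in requests if r.status >= 500)
    return {
        "throughput_rps": len(requests) / window_seconds,
        "error_rate": server_errors / len(requests),
        "p95_latency_ms": latencies[rank - 1],
    }
```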

Issue Detection and Troubleshooting: Monitoring and logging provide valuable insights into the behavior and state of systems. When issues or anomalies occur, monitoring alerts and logs help identify the root cause and enable faster troubleshooting. By correlating metrics and logs, teams can pinpoint the specific events or conditions leading to problems and take appropriate actions to resolve them promptly.

Capacity Planning and Optimization: Monitoring data helps in capacity planning by providing visibility into resource utilization and trends. It allows teams to identify potential capacity constraints and plan for scaling infrastructure resources proactively. By analyzing historical data and forecasting future demands, organizations can optimize resource allocation, reduce costs, and ensure smooth performance during peak periods.
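A simple way to turn historical utilization into a forecast is a least-squares linear trend. The sketch below estimates how many days remain before daily peak utilization crosses a threshold. The straight-line model, the input format (one peak value per day), and the function name are assumptions, and real demand is often seasonal rather than linear.

```python
from typing import List, Optional


def days_until_threshold(daily_peaks: List[float], threshold: float) -> Optional[float]:
    """Fit y = a + b*x by least squares; return days from the latest point to the threshold."""
    n = len(daily_peaks)
    if n < 2:
        raise ValueError("need at least two data points")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(daily_peaks) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_peaks))
    slope = sxy / sxx
    if slope <= 0:
        return None  # flat or falling trend: no crossing predicted
    intercept = y_mean - slope * x_mean
    crossing_day = (threshold - intercept) / slope
    # Measured from the most recent day; 0.0 means the threshold is already reached.
    return max(0.0, crossing_day - (n - 1))
```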

Security and Compliance: Monitoring and logging are essential for maintaining security and compliance in a DevOps environment. By monitoring security-related metrics, logs, and events, organizations can detect and respond to potential security breaches or unauthorized access attempts. Logging also helps meet compliance requirements by capturing and retaining audit trails and ensuring accountability.

Continuous Improvement: Monitoring and logging data provide valuable feedback to drive continuous improvement. By analyzing trends, patterns, and performance metrics, teams can identify areas for optimization, implement performance enhancements, and refine the infrastructure and application architecture. Continuous monitoring and analysis enable organizations to iteratively improve their systems, making them more reliable, efficient, and resilient over time.

Collaboration and Communication: Monitoring and logging data serve as a common source of truth, fostering collaboration and effective communication between development, operations, and other stakeholders. Real-time visibility into system health allows teams to share insights, discuss potential improvements, and make informed decisions based on data-driven observations.

Incident Response and Recovery: In the event of incidents or outages, monitoring and logging data help in incident response and recovery efforts. Log data assists in understanding the sequence of events leading up to the incident, facilitating post-incident analysis and remediation. Monitoring tools and alerts enable quick detection of anomalies and trigger appropriate actions for incident response, minimizing downtime and impact on users.

In summary, monitoring and logging are essential components of a DevOps environment as they provide visibility, facilitate issue detection and troubleshooting, support capacity planning and optimization, ensure security and compliance, drive continuous improvement, enable collaboration and aid in incident response and recovery. By leveraging monitoring and logging effectively, organizations can proactively manage their systems, ensure high performance, and deliver reliable and resilient applications to their users.

AWS CloudTrail:

AWS CloudTrail is a service that enables organizations to monitor and log API activity across their AWS infrastructure. It provides a comprehensive audit trail of API calls, allowing organizations to track and review actions taken by users, applications, or AWS services. Here's how AWS CloudTrail helps track API calls and provides an audit trail for compliance and security purposes:

API Activity Logging: AWS CloudTrail captures detailed information about API calls made within an AWS account, including the identity of the caller, the time of the call, the source IP address, the requested API operation and its parameters, and the response elements returned. A trail delivers this information as log files to an S3 bucket and can optionally send it to CloudWatch Logs for further analysis and monitoring.
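Trails deliver log files to S3 as gzip-compressed JSON documents with a top-level `Records` array. The sketch below condenses each record into who did what, from where, and whether it failed, using the record fields `eventTime`, `eventSource`, `eventName`, `sourceIPAddress`, `userIdentity`, and `errorCode`. Downloading the object from S3 is omitted, and the summary keys are hypothetical.

```python
import gzip
import json
from typing import Dict, Iterator


def iter_api_calls(log_bytes: bytes) -> Iterator[Dict[str, str]]:
    """Yield a compact summary of every record in a gzipped CloudTrail log file."""
    payload = json.loads(gzip.decompress(log_bytes))
    for record in payload.get("Records", []):
        identity = record.get("userIdentity", {})
        yield {
            "time": record.get("eventTime", ""),
            # Prefer the caller's ARN; some identity types (e.g. AWS services) lack one.
            "who": identity.get("arn") or identity.get("type", "unknown"),
            "source_ip": record.get("sourceIPAddress", ""),
            "action": f'{record.get("eventSource", "")}:{record.get("eventName", "")}',
            "error": record.get("errorCode", ""),  # absent when the call succeeded
        }


# Hypothetical usage: bytes from s3.get_object(Bucket=..., Key=...)["Body"].read()
```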

Visibility and Accountability: CloudTrail provides visibility into API activity, allowing organizations to see who performed what actions and when. It helps establish accountability by associating API calls with specific AWS identities, such as IAM users, roles, or AWS services.

Compliance and Auditing: CloudTrail aids in meeting compliance requirements by providing an audit trail of API activity. The logged information can be used for internal audits, regulatory compliance, or security investigations, and it helps organizations produce evidence for standards and regulations such as PCI DSS, HIPAA, and GDPR.

Security Analysis: CloudTrail logs serve as a valuable source of information for security analysis and incident response. By monitoring API activity, organizations can detect and investigate suspicious or unauthorized actions, identify potential security breaches, and take appropriate remedial actions.

Integration with AWS Services: CloudTrail integrates with other AWS services, enhancing security and compliance capabilities. It records AWS Identity and Access Management (IAM) actions, AWS CloudFormation calls that change infrastructure stacks, AWS Lambda function invocations (when data event logging is enabled), and API activity from many other services across the AWS ecosystem.

Real-time Monitoring and Alerting: CloudTrail events can drive near-real-time notifications and alerts for specific API events or patterns. By setting up Amazon EventBridge (formerly CloudWatch Events) rules, or CloudWatch Logs metric filters and alarms on a trail's log group, organizations can be notified promptly of critical activities, such as unauthorized API calls or changes to security group configurations.
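The security group example can be expressed as an EventBridge event pattern that matches CloudTrail-recorded EC2 API calls. The sketch defines such a pattern plus a small local matcher for testing it against sample events. The list of event names is an illustrative selection, and the matcher handles only exact-value matching on scalar fields, a small subset of EventBridge's real semantics (no prefix, numeric, or array-field matching). It assumes CloudTrail is recording these management events in the Region.

```python
from typing import Any, Dict

# Pattern for CloudTrail-recorded EC2 security group changes (illustrative event names).
SG_CHANGE_PATTERN: Dict[str, Any] = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": [
            "AuthorizeSecurityGroupIngress",
            "AuthorizeSecurityGroupEgress",
            "RevokeSecurityGroupIngress",
            "RevokeSecurityGroupEgress",
        ],
    },
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified local check: every pattern key must exist and hold a listed value."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the matching sub-object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```

To deploy it, the pattern would be passed to EventBridge as a JSON string, for example `events.put_rule(Name=..., EventPattern=json.dumps(SG_CHANGE_PATTERN))` in boto3, with an SNS topic attached through `put_targets`.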

Log File Integrity and Encryption: When log file integrity validation is enabled, CloudTrail delivers digitally signed digest files that contain SHA-256 hashes of the log files, so you can detect whether a log file was modified or deleted after delivery. Log files are encrypted at rest with Amazon S3 server-side encryption by default, and organizations can instead use AWS Key Management Service (KMS) keys for additional control over the confidentiality of the log data.
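For routine checks, the AWS CLI command `aws cloudtrail validate-logs` performs the full validation. The sketch below shows only the core idea behind it: recompute a log file's SHA-256 hash and compare it with the value recorded in a digest file. It assumes the recorded hash covers the uncompressed log content (confirm this against the digest file documentation), takes the expected hash as a plain argument rather than parsing the digest, and does not verify the digest file's own signature.

```python
import gzip
import hashlib
import hmac


def log_file_matches_digest(log_bytes: bytes, expected_sha256_hex: str) -> bool:
    """Recompute a log file's SHA-256 and compare it with the digest's value.

    ASSUMPTION: the digest records the hash of the uncompressed log content.
    The digest file's own signature is not checked here.
    """
    content = gzip.decompress(log_bytes)
    actual = hashlib.sha256(content).hexdigest()
    # Constant-time comparison; hex case is normalized first.
    return hmac.compare_digest(actual, expected_sha256_hex.lower())
```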

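Centralized Management and Analysis: CloudTrail logs from multiple AWS accounts can be consolidated with an organization trail (through AWS Organizations) that delivers to a single S3 bucket, or queried centrally with AWS CloudTrail Lake. AWS CloudTrail Insights complements this by flagging unusual API call volumes or error rates. Together, these give organizations a holistic view of API activity across their AWS infrastructure to identify trends, detect anomalies, and gain actionable insights.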
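CloudTrail Insights is a managed feature with its own baseline model. Purely to illustrate the idea of flagging unusual API activity, the sketch below marks hours whose call count rises well above a trailing baseline (mean plus `threshold` standard deviations). The window length, threshold, and floor on the standard deviation are assumptions, and this is not how Insights computes its baselines.

```python
from statistics import mean, pstdev
from typing import List


def flag_unusual_hours(hourly_counts: List[int], baseline_hours: int = 24,
                       threshold: float = 3.0) -> List[int]:
    """Return indexes of hours whose API call count is far above the trailing baseline."""
    flagged = []
    for i in range(baseline_hours, len(hourly_counts)):
        window = hourly_counts[i - baseline_hours:i]
        mu, sigma = mean(window), pstdev(window)
        # Floor sigma at 1.0 so a perfectly flat baseline does not flag tiny wobbles.
        if hourly_counts[i] > mu + threshold * max(sigma, 1.0):
            flagged.append(i)
    return flagged
```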

By leveraging AWS CloudTrail, organizations can track API calls, maintain an audit trail of actions, ensure compliance with regulations, enhance security monitoring, and establish accountability for API activity within their AWS environment. It provides a valuable tool for security, compliance, and incident response teams to analyze and investigate activities and maintain a comprehensive record of actions taken within their AWS infrastructure.

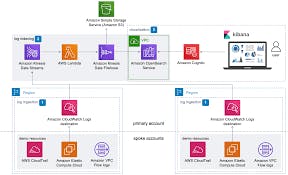

The integration of AWS CloudWatch and CloudTrail with other monitoring and logging tools like Splunk or ELK stack:

AWS CloudWatch and CloudTrail can be integrated with popular monitoring and logging tools like Splunk or the ELK (Elasticsearch, Logstash, and Kibana) stack to provide enhanced capabilities for monitoring, analysis, and visualization of log data. Here's how these integrations work:

AWS CloudWatch and Splunk Integration:

Splunk can ingest data from AWS CloudWatch using the Splunk Add-on for AWS. This add-on allows you to collect CloudWatch logs and metrics, providing a unified view of your AWS environment within Splunk.

By integrating CloudWatch with Splunk, you can leverage Splunk's powerful search and analysis capabilities to gain insights from CloudWatch logs and metrics data.

Splunk can correlate CloudWatch logs and metrics with data from other sources, providing a comprehensive view of your application and infrastructure performance.

You can set up alerts and notifications in Splunk based on CloudWatch metrics, enabling proactive monitoring and alerting for critical events or performance thresholds.

AWS CloudTrail and Splunk Integration:

Splunk can also ingest data from AWS CloudTrail using the Splunk Add-on for AWS. This integration enables you to collect and analyze CloudTrail logs in Splunk.

By integrating CloudTrail with Splunk, you can combine CloudTrail logs with other log sources, such as application logs or security logs, for comprehensive security monitoring and analysis.

Splunk's search and analytics capabilities enable advanced querying, reporting, and visualization of CloudTrail data, helping you identify security threats, audit activities, and track changes across your AWS environment.

AWS CloudWatch and ELK Stack Integration:

The ELK stack, consisting of Elasticsearch, Logstash, and Kibana, provides a scalable and open-source solution for log management and analysis.

Logstash can be used to collect and process log data from AWS CloudWatch. Logstash pipelines can be configured to retrieve CloudWatch logs and send them to Elasticsearch for indexing.

Elasticsearch serves as the search and indexing engine, storing the log data in a distributed and scalable manner.

Kibana provides a powerful visualization and analytics interface for querying and exploring log data stored in Elasticsearch.

By integrating CloudWatch with the ELK stack, you can centralize, analyze, and visualize CloudWatch logs alongside other log sources, enabling comprehensive log analysis and monitoring capabilities.
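Besides having Logstash poll CloudWatch, a common route is a CloudWatch Logs subscription filter that pushes log events to a Lambda function as base64-encoded, gzip-compressed JSON (in the Lambda event under `awslogs.data`). The sketch below converts one such payload into a request body for Elasticsearch's `_bulk` API. The index name, document fields, and function name are assumptions, and sending the request (with authentication) is left out.

```python
import base64
import gzip
import json
from datetime import datetime, timezone
from typing import Any, Dict


def subscription_to_bulk(encoded_data: str, index: str) -> str:
    """Convert one CloudWatch Logs subscription payload into a _bulk request body."""
    payload: Dict[str, Any] = json.loads(gzip.decompress(base64.b64decode(encoded_data)))
    if payload.get("messageType") != "DATA_MESSAGE":
        return ""  # CONTROL_MESSAGE payloads carry no log events
    lines = []
    for event in payload.get("logEvents", []):
        # Reusing the CloudWatch event ID as _id makes retries idempotent.
        lines.append(json.dumps({"index": {"_index": index, "_id": event["id"]}}))
        lines.append(json.dumps({
            # CloudWatch timestamps are epoch milliseconds; store ISO-8601 UTC.
            "@timestamp": datetime.fromtimestamp(event["timestamp"] / 1000, tz=timezone.utc).isoformat(),
            "message": event["message"],
            "log_group": payload.get("logGroup"),
            "log_stream": payload.get("logStream"),
        }))
    # The _bulk API expects newline-delimited JSON ending with a newline.
    return "\n".join(lines) + "\n" if lines else ""


# Hypothetical Lambda usage: subscription_to_bulk(event["awslogs"]["data"], "cloudwatch-logs")
```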

These integrations with Splunk or the ELK stack offer the following benefits:

Centralized Log Analysis: Integrating CloudWatch and CloudTrail with monitoring and logging tools allows you to centralize log data from various sources, including AWS services and applications, for unified log analysis and troubleshooting.

Advanced Analytics: Splunk and the ELK stack provide powerful search, analysis, and visualization capabilities, enabling you to gain actionable insights from CloudWatch and CloudTrail data.

Correlation with Other Data: By combining CloudWatch and CloudTrail logs with other log sources, you can correlate events, perform root cause analysis, and gain a comprehensive understanding of your application and infrastructure behavior.

Alerting and Monitoring: These integrations enable you to set up alerts, notifications, and dashboards based on CloudWatch metrics or specific log events, ensuring proactive monitoring and alerting for critical issues.

Scalability and Flexibility: Both Splunk and the ELK stack are designed to handle large volumes of log data, allowing you to scale your log management and analysis infrastructure as your needs grow.

Overall, integrating AWS CloudWatch and CloudTrail with tools like Splunk or the ELK stack enhances your log management and analysis capabilities, enabling you to derive meaningful insights, improve operational efficiency, and ensure the security and performance of your AWS environment.

Conclusion:

In conclusion, implementing DevOps practices using AWS services offers numerous benefits for software development and delivery. Throughout this blog, we explored key AWS services that support the DevOps lifecycle, such as AWS CodeCommit, AWS CodePipeline, AWS CodeBuild, AWS CodeDeploy, AWS CloudFormation, and more.

By leveraging these services, teams can streamline software delivery and collaboration, achieve continuous integration and delivery, and embrace Infrastructure as Code. The integration of monitoring and logging tools like AWS CloudWatch and CloudTrail enhances observability and supports compliance efforts.

The value of implementing DevOps practices with AWS is evident in the automation, scalability, and flexibility it provides. It enables faster time-to-market, improved code quality, and better collaboration among team members.

I encourage readers to explore the vast capabilities of AWS services and leverage them to drive their DevOps initiatives. By harnessing the power of AWS, teams can achieve greater agility, efficiency, and reliability in their software development processes, ultimately delivering value to their customers faster and more consistently.
